|

|

Emanuele Rodolà

Intelligent Systems and Informatics Lab

The University of Tokyo

Room# 622 Eng.Bldg.6

7-3-1 Hongo Bunkyo-ku

Tokyo 113-8656

JAPAN

E-mail: rodola at isi dot imi dot i dot u-tokyo dot ac dot jp

Home | Publications | Software | Datasets | Videos

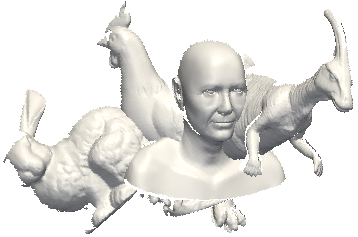

3D Object in Clutter Recognition and Segmentation

This dataset focuses on the recognition of known objects in cluttered and incomplete 3D scans. Fitting a model to a scene is a very important task in many scenarios such as industrial inspection, scene understanding and even gaming.

The dataset is composed of 150 synthetic scenes, captured with a (perspective) virtual camera, and each scene contains 3 to 5 objects. The model set is composed of 20 different objects, taken from different sources and then processed in order to obtain comparably smooth surfaces of almost uniform 100-350k triangles with an average resolution of 1.0.

In particular, the model set contains processed versions of:

- armadillo, bunny, dragon from the Stanford 3D scanning repository

- chef, t-rex, parasaurolophus, chicken, rhino from A. Mian's object recognition dataset

- cat1, centaur1, david2, dog7, gorilla0, horse7, lioness13, victoria3, wolf2 from TOSCA's non-rigid world

- face, ganesha respectively from M. Sala's face dataset and the Computer Vision lab in Ca' Foscari

- gun0026 from SHREC'11 retrieval dataset

DOWNLOAD

The collection of scenes in binary PLY format, together with ground-truth segmentation masks, can be downloaded HERE (148 MB zipped).

The set of models can be downloaded separately HERE (46 MB zipped).

The ground-truth rigid motions aligning each model to each scene are available HERE (62 KB zipped).

CITATION

The paper is under revision.

FORMAT

The model objects as well as the range scenes come as standard binary PLY files. However, if you have any problems loading them, please let me know and I'll be glad to help.

The ground-truth .txt files specify, for each scene, the number of models appearing into it and the rigid motion bringing each model to its scene position. Each model is described by a line containing the model name, the rotation matrix row-major, and the translation vector, i.e.:

[model name] [R00 R01 R02 R10 R11 R12 R20 R21 R22] [T0 T1 T2]

Each scene comes with a .segments file containing segmentation labels for each scene point, in the same order as they appear in the PLY file. The labels are simply integer numbers specifying the model objects to which scene vertices belong; each number refers to the order of appearance of the model in the ground-truth .txt file, starting from 1 (for example, the label 2 indicates the second model in the .txt). The label 0 can be safely ignored an must be skipped when processing the segmentation file. The initial header of each file has the following meaning:

[resolution] [z-gap] [total # vertices] [# models] [# vertices model 1] [# vertices model 2] ... [# vertices model N]

Finally, occlusion_clutter.txt contains ground-truth occlusion and clutter for each model in each scene, defined as follows:

occlusion = 1 - (visible object area) / (total object area)

clutter = 1 - (visible object area) / (total scene area)

NOTES

- This dataset is designed to be challenging. It contains many smooth (feature-less) as well as similar objects (e.g., david2 vs victoria3 or horse7 vs centaur1), that should provide enough room for false positives even under low levels of occlusion and clutter. Additionally, some scenes present intersecting surfaces, which will induce spurious descriptors and keypoints for feature-based methods.

- The scenes as they are have no additional sensor-like noise applied to them, in order to give more freedom of use in your experiments. To better simulate a scanning process, you might want to apply depth positional noise to the scene vertices.

- Ground-truth occlusion and clutter might be slightly under-estimated due to some models having a few internal vertices, which cannot be captured by any range image. However, the error is practically negligible.

- The gun0026 model has many internal vertices, and as such its occlusion/clutter estimate has a large error. You should also keep this in mind in case you're going to use surface descriptors expecting them to be invariant to the depth scan transformation of this model.

|

|

|

|